I wrote a piece for The Conversation on my work in psychophysics. You can read the published article here. For those interested, the original unedited draft is below.

Unedited Article

Outside of entertainment, virtual reality (VR) has seen significant uptake in more practical domains. For example, VR can be used to piece together the parts of a car engine and test its look and feel before manufacturing begins, or to try on the latest fashion accessory before you buy. Our own recent work at Bath has applied VR to exercise; imagine going to the gym to take part in the Tour de France and race against the world’s top cyclists.

While VR has been successful, it is not without its kinks. Designing an interactive system does not stop at the hardware and software; the human must be factored in too. Perception is the term for how we take information from the world and build understanding from it. Our perception of reality is what we base our decisions on, and it largely determines our sense of presence in an environment.

So how do we tackle the problem of designing VR systems that really transport us to new worlds with an acceptable sense of presence? As the scale of the problem grows, it becomes difficult to quantify the contribution each element of the experience makes to a person’s sense of presence. For example, when watching a 360-degree film in VR, it is difficult to determine whether the on-screen animations contribute more or less than the 360-degree audio technology deployed in the experience. What we need is a method for studying VR in a reductionist manner: removing the clutter, then adding elements back piece by piece to observe the effect each has in turn.

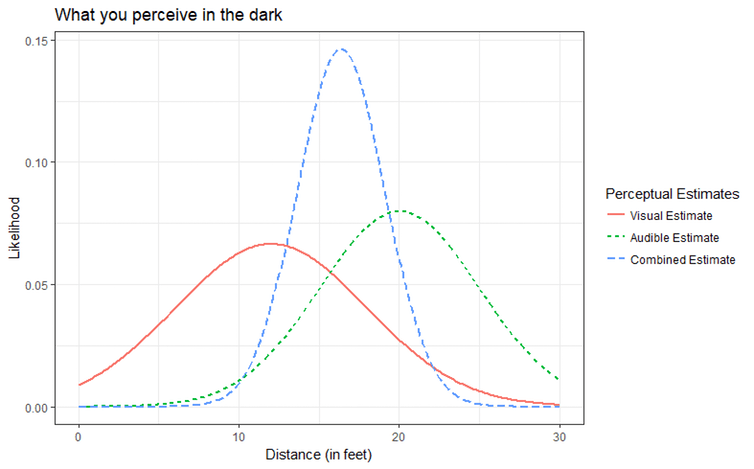

One theory blends computer science and psychology: maximum likelihood estimation explains how we combine the information we receive across all our senses, integrating it to inform our understanding of the environment. In its simplest form, it states that we combine sensory information in an optimal fashion. Each sense contributes an estimate of the environment, but each estimate is noisy, so the more reliable (less noisy) a sense is, the more weight its estimate receives.

This scenario is depicted in the figure, which shows how the estimates from our eyes and ears combine to give an optimal estimate somewhere in the middle. Note how the blue curve is slimmer than the other two, showing decreased variance; the combined estimate takes the best of both worlds. It is also positioned between the two sensory estimates, showing a compromise between the two. Finally, note that it is taller: this corresponds to a higher likelihood at its peak.
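The three properties of the combined curve (slimmer, in between, taller) can be checked with a few lines of arithmetic. What follows is a minimal sketch, assuming each sense delivers an independent, Gaussian-distributed estimate; the function names and the numbers (a visual and an auditory estimate of a distance in metres) are hypothetical and chosen purely for illustration, not taken from our experiments.

```python
import math


def combine_estimates(mu_a, sigma_a, mu_b, sigma_b):
    """Fuse two independent Gaussian estimates by inverse-variance weighting.

    Each estimate is weighted by its precision (1 / variance), so the
    less noisy sense pulls the combined estimate closer to itself.
    """
    precision_a = 1.0 / sigma_a ** 2
    precision_b = 1.0 / sigma_b ** 2
    total_precision = precision_a + precision_b
    mu = (precision_a * mu_a + precision_b * mu_b) / total_precision
    sigma = math.sqrt(1.0 / total_precision)
    return mu, sigma


def gaussian_peak(sigma):
    """Height of a Gaussian probability density at its mean."""
    return 1.0 / (sigma * math.sqrt(2.0 * math.pi))


# Hypothetical inputs: vision says 10 m (sd 1 m), hearing says 12 m (sd 2 m).
visual_mu, visual_sigma = 10.0, 1.0
audio_mu, audio_sigma = 12.0, 2.0

combined_mu, combined_sigma = combine_estimates(
    visual_mu, visual_sigma, audio_mu, audio_sigma
)

print(f"combined estimate: {combined_mu:.2f} m, sd {combined_sigma:.2f} m")
print(f"peak heights: visual {gaussian_peak(visual_sigma):.3f}, "
      f"audio {gaussian_peak(audio_sigma):.3f}, "
      f"combined {gaussian_peak(combined_sigma):.3f}")
```

With these made-up inputs the combined estimate lands between the two senses but nearer the more reliable one, its standard deviation is smaller than either input, and its peak is higher than both.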

This has many applications in VR. Our recent work has applied it to a known problem with how people estimate distances in VR. Imagine using a driving simulator to teach people how to drive. If people compress distances in VR, judging them to be shorter than they really are, then using it as a learning environment would be inappropriate.

Understanding how people integrate information from their senses is crucial to VR because VR is not solely visual. Maximum likelihood estimation is a tool for modelling how effectively a VR system needs to render its multisensory environment in order to deliver the desired experience. Understanding perception will lead to more immersive VR experiences. It’s not a matter of separating each signal from the noise; it’s a matter of taking all signals with the noise to give the most likely result.

https://orcid.org/0000-0003-1169-2842
